The Destiny 2 beta opened just days ago to those who preordered the game, and today we have a copy on hand for a heap of GPU benchmarking. Although the PC retail release won't be until late October, this seemed like a great opportunity to see what kind of hardware the game is likely to require.

We recognize that performance is subject to change by the time the title launches. It's worth noting, though, that AMD and Nvidia released driver updates about a week ago to better support Destiny 2 ahead of the beta, and both Radeon and GeForce graphics cards seem to run fine so far.

Our Core i7-7700K test system was clocked at 4.9GHz, though, as a bit of a spoiler, Destiny 2 also played well on cheaper processors. We also looked at the Ryzen 5 1600 and will compare those numbers to the Core i7-7700K later.

For now we have 30 GPUs to focus on, 17 of which are from the current generation lineup while the other 13 are from last season. All of the cards were tested at 1080p, 1440p and 4K using Destiny 2's 'high' quality preset, which is the second highest quality preset.

The maximum quality preset, called 'highest', completely smashes performance because it enables MSAA. Seeing as I didn't really notice a difference between MSAA and SMAA, I opted for the second-highest quality preset ('high'), which uses the latter anti-aliasing method.

Since you can't save your progress during the single-player tutorial/intro, I only tested the first 60 seconds of the game. After running it more than 90 times I can safely say that I have it memorized, though this approach was a massive pain because we had to exit the game after every single run to reset everything. I hope when the game is officially released we can find a significantly more efficient testing method.
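For readers curious how a single 60-second pass turns into an average frame rate and a 1% low figure, here's a minimal sketch. It assumes a per-frame log of frame times in milliseconds and defines the 1% low as the average frame rate across the slowest 1% of frames; that definition is an assumption, since capture tools differ (some report the 99th-percentile frame time instead). The function name and the sample data are hypothetical.

```python
def summarize_run(frame_times_ms):
    """Reduce one benchmark pass to (average fps, 1% low fps).

    Assumption: the 1% low is the average frame rate of the slowest 1% of
    frames. Some tools instead report the 99th-percentile frame time.
    """
    if not frame_times_ms:
        raise ValueError("no frames captured")

    # Average fps = frames rendered / seconds elapsed (not a mean of per-frame fps).
    total_seconds = sum(frame_times_ms) / 1000.0
    avg_fps = len(frame_times_ms) / total_seconds

    # The slowest frames are the ones with the longest frame times.
    slowest_first = sorted(frame_times_ms, reverse=True)
    n_worst = max(1, len(slowest_first) // 100)
    worst = slowest_first[:n_worst]
    low_fps = n_worst / (sum(worst) / 1000.0)

    return avg_fps, low_fps


# Hypothetical capture: 99 frames at 10 ms plus one 20 ms hitch.
avg, low = summarize_run([10.0] * 99 + [20.0])
print(f"avg {avg:.1f} fps, 1% low {low:.1f} fps")  # prints: avg 99.0 fps, 1% low 50.0 fps
```

Note how a single hitch barely moves the average but halves the 1% low, which is why the low figure is the better indicator of whether a card stays above 60fps "at all times".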

Also note that our plans to benchmark the multiplayer section of Destiny 2's beta were abandoned early on because it took way too long to get into a game. Anyway, let's get to some numbers...

Benchmark Time

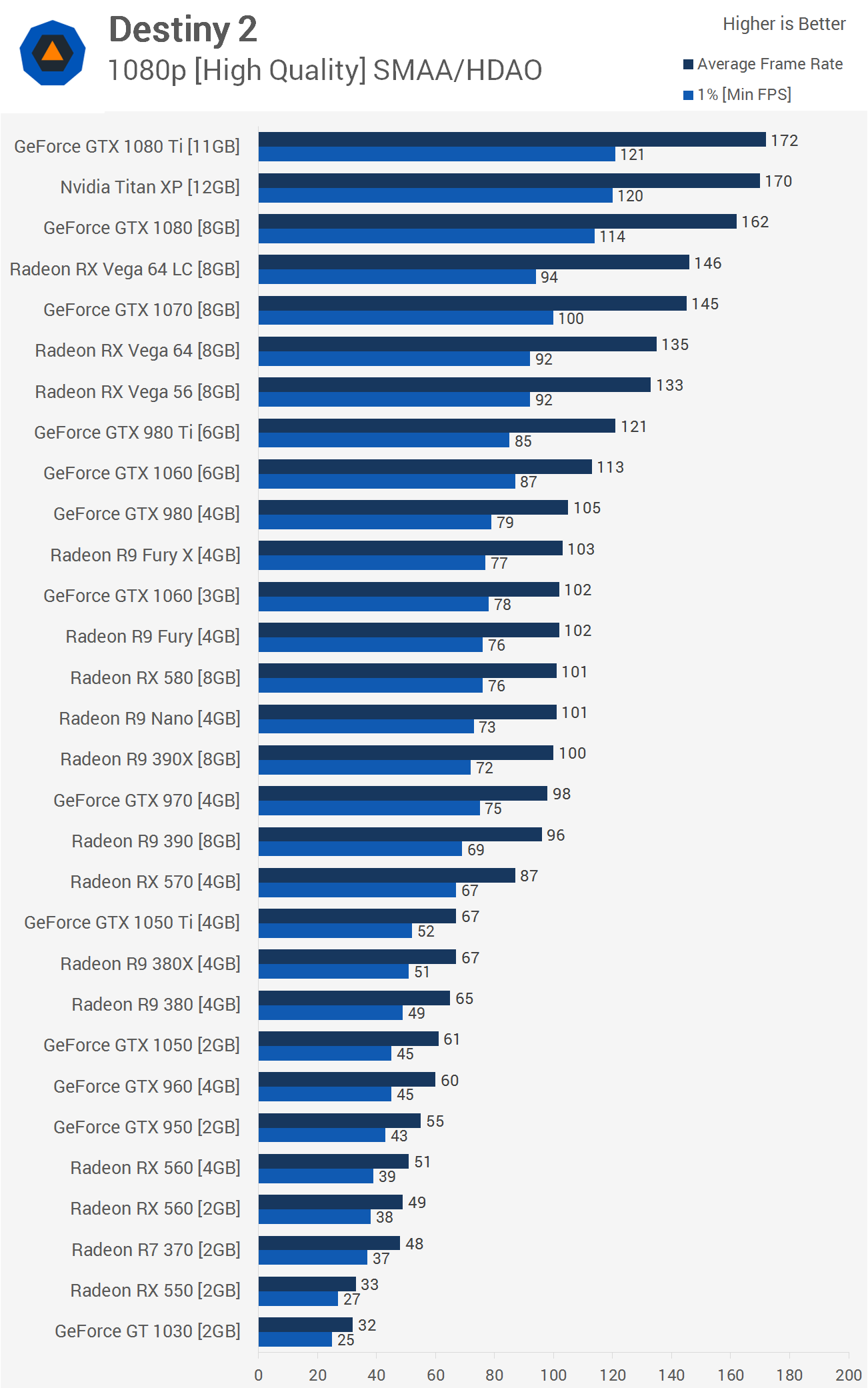

First up are the 1080p results. As you can see there are many cards capable of delivering between 96 and 105fps on average and plenty more that are good for 60fps or better.

Ignoring the high-end gear for now, as we're only at 1080p, we find that to keep things above 60fps at all times gamers will require an RX 570 or GTX 1060 3GB, and frankly those are some pretty mild demands. For now Nvidia has a clear advantage in this title, at least at lower resolutions, and we see this when comparing GPUs such as the RX 580 and GTX 1060.

Looking at the previous generation GPUs we see they have no trouble maintaining well over 60fps at all times, as demonstrated by the Radeon R9 390 and GeForce GTX 970.

Even the R9 380 and GTX 960 do well, never dipping below 60fps. It's interesting to see that the Fury series wasn't really able to pull ahead of the R9 390X, not to mention that it trailed the GTX 980 and got completely stomped by the GTX 980 Ti. Fine wine ain't working here, folks.

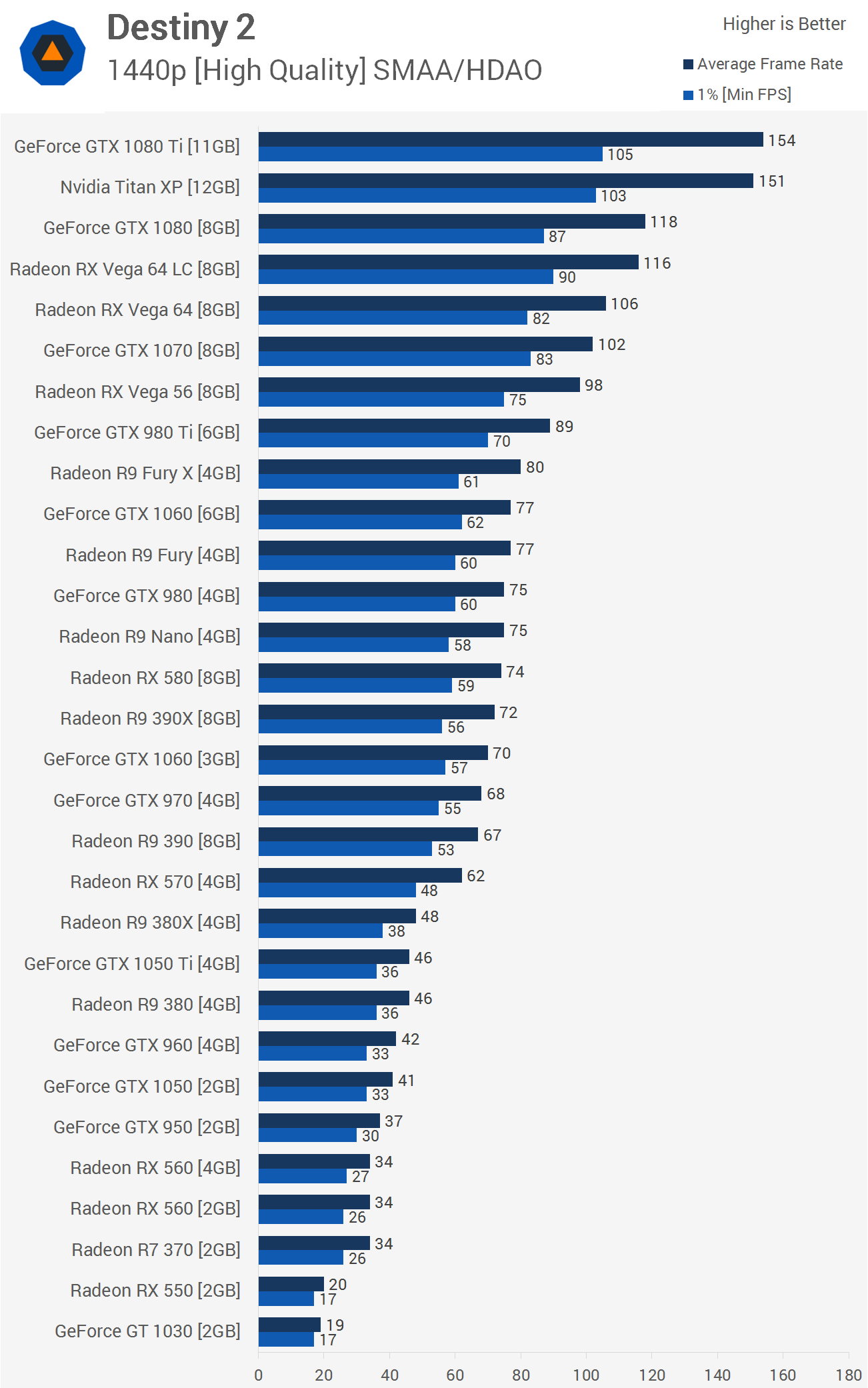

We see a wider spread of results at 1440p, and again there are a huge number of GPUs capable of pushing over 60fps. Those seeking 100fps or greater will either need a high-end GPU or will have to back the quality preset down to medium.

Increasing the resolution to 1440p greatly reduces the margins, and now just three frames per second stand between the GTX 1060 and RX 580, both of which provided excellent performance at this reasonably high resolution. For an average of around 100fps you will want to seek out a GTX 1070 or Vega 56, and to reach well beyond 100fps the GTX 1080 Ti will be required.

The Fury series starts to recover as well, though even here the Fury X is well down on the GTX 980 Ti. Meanwhile the GTX 970 edged ahead of the R9 390, though it has to be said that both provided exceptionally smooth frame rates.

Quite shockingly, even the GeForce GTX 950 managed to keep frame rates above 30fps for a console-ish feel, though with considerably better graphics and resolution than you'd get on a console.

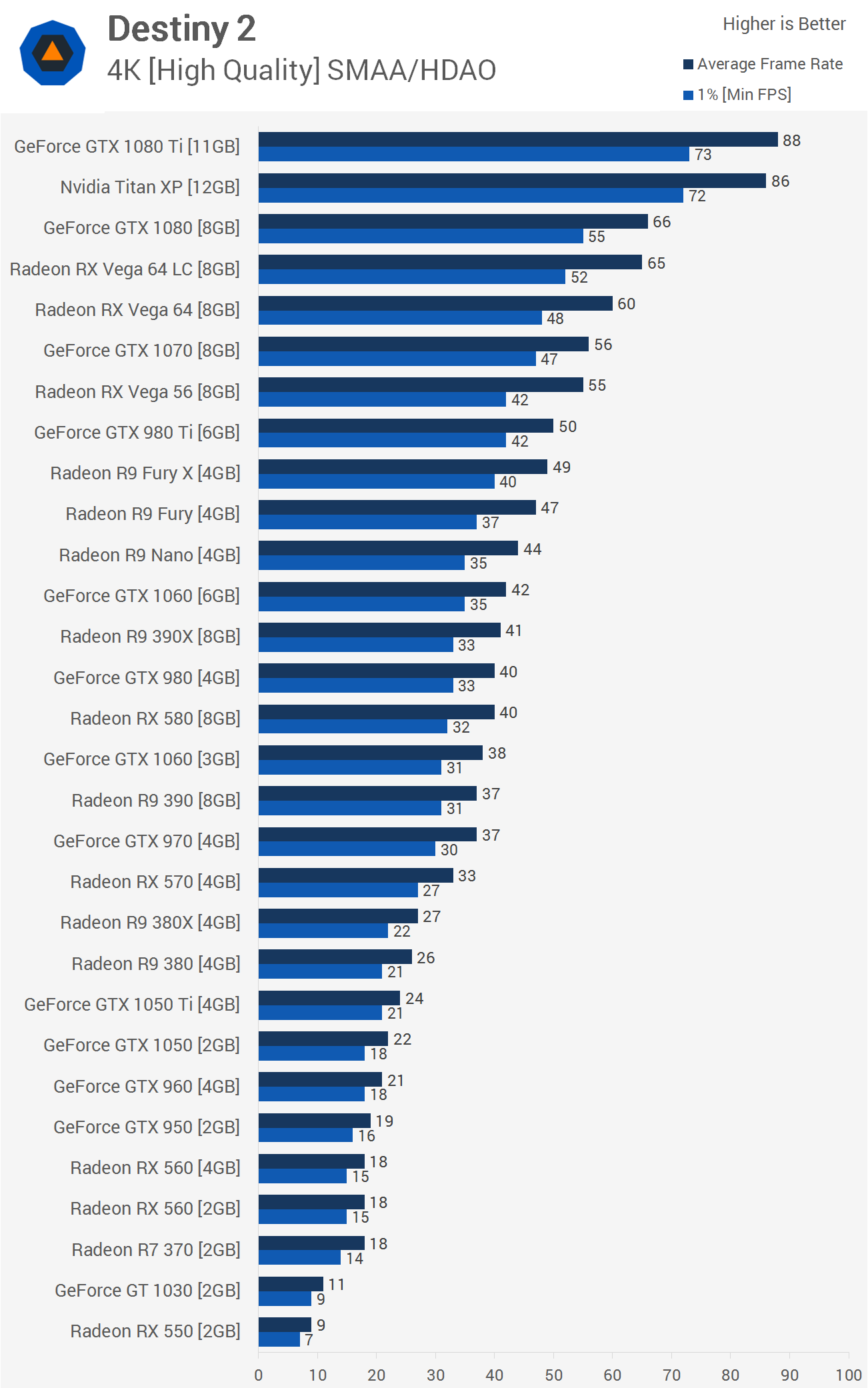

Finally, if you want to play at 4K with high quality settings and still average at least 60fps, your options are even more limited. The best value choice here is clearly the GTX 1080, but damn, the Ti version was nice in this title at 4K.

As expected, folks with an older GPU will ideally want a previous generation flagship part such as the GTX 980 Ti or Fury X. The mid-range to high-end models such as the GTX 970 and R9 390 did well, but you'll probably want more horsepower for 4K gaming.

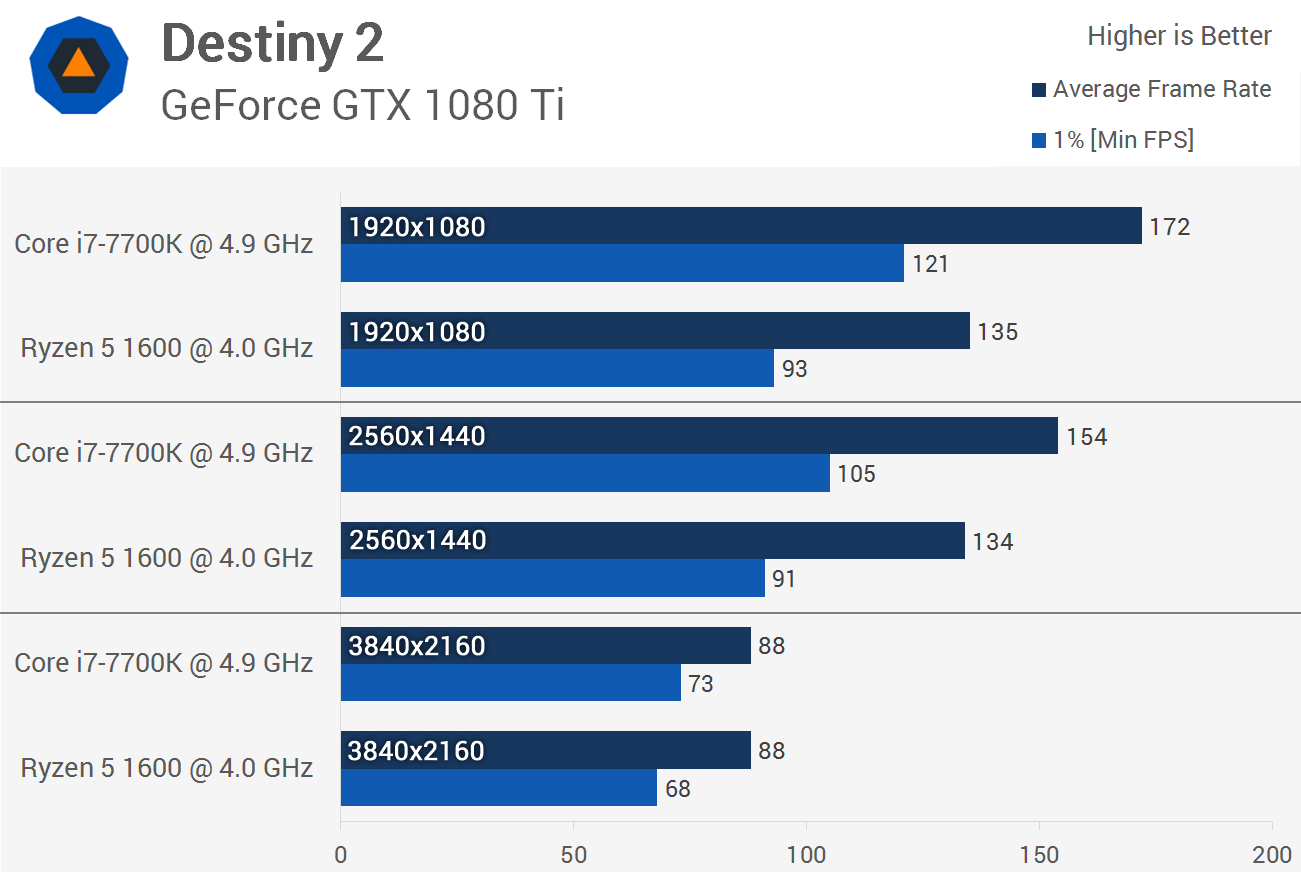

Before wrapping this up I tossed the GTX 1080 Ti into both the 7700K and Ryzen 5 1600 test systems to see how they compare. These results are only from the first 60 seconds of the single-player portion and I am aware larger open sections of the game as well as multiplayer modes will be more CPU demanding, but until the game is officially released I can't test that, so this will have to do.

As expected, at 4K we run into a massive GPU bottleneck and performance is much the same, though the 7700K still maintained better 1% low results. Moving to 1440p, the Intel CPU offered 15% greater performance, and this margin was blown out to 27% at 1080p. Utilization was good on both CPUs and the game evenly distributed load across the R5 1600's cores; it just didn't give the chip enough work to warrant having all those extra cores for this title.
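Margins like the 15% and 27% figures are relative differences, and the baseline matters. Here's a minimal sketch, assuming the slower system (the Ryzen 5 1600) is the baseline; the function name is hypothetical and the fps values in the example are made up, not the measured results.

```python
def percent_faster(fast_fps, slow_fps):
    """Return how much faster fast_fps is than slow_fps, as a percentage of slow_fps."""
    if slow_fps <= 0:
        raise ValueError("baseline fps must be positive")
    return (fast_fps / slow_fps - 1.0) * 100.0


# Hypothetical averages: 127 fps vs 100 fps gives about a 27% margin.
print(f"{percent_faster(127.0, 100.0):.0f}%")  # prints: 27%
```

Flipping the baseline changes the number: 100fps is only about 21% slower than 127fps, so "X% faster" and "X% slower" are not interchangeable.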

As we said earlier, you can expect more in-depth coverage of Destiny 2's performance when the game actually launches.

Final Thoughts

Well, I have to say that although I haven't had a chance to check out the multiplayer action yet, I was pleasantly surprised with what I found in the Destiny 2 beta. The game looks great, plays well and is already significantly more polished than most of the triple-A titles I tested last year, so hats off to the developers over at Bungie.

For single-player, a mid-range GPU will have you more than covered at 1080p using high quality settings, and many cards will even provide a smooth 60fps at 1440p. AMD's GPUs lag behind quite a bit at 1080p but come alive at 1440p and 4K, where they are very competitive.

Speaking of 4K, did you see the GTX 970 going toe to toe with the R9 390 at 37fps on average at this resolution? Weren't the 970 and its measly 3.5GB frame buffer meant to be obsolete and a heap of rubbish by now? Maybe I have my dates mixed up... anyway, the extremely popular Maxwell-based GPU is still trucking along just fine, and so too is the R9 390.

Further down the food chain, the R9 380 had its way with the GTX 960 while the GTX 950 easily dispatched the R7 370. At the complete opposite end of the spectrum, the GTX 980 Ti once again proved to be far too powerful for the Fury X.

As for current generation GPUs, if you're lucky enough to own a GTX 1060 or RX 580, then you should be able to take advantage of what Destiny 2 has to offer. For the single-player portion of the game, as long as you have a CPU that's better than, say, an FX-series or an old Core i3, you'll be fine. All of the Ryzen CPUs held their own, as did the newer Intel dual-core chips with Hyper-Threading.

The latest Core i3s see utilization hitting around 80%, and this was also true for the Pentium G4560, while the Ryzen 3 1200 was around 60-70% and the Ryzen 5 1400 only saw around 50% load.

When it came to memory, we were only seeing around 2.3GB of VRAM allocated at 1440p, and this explains why the 3GB GTX 1060 does so well, along with the GTX 970. Even at 4K we are only starting to approach the limits of a 3GB buffer, but of course these GPUs run out of processing power before memory capacity becomes an issue. As for system memory, it looks like 8GB of RAM will have you covered, as the game occupies around 5-6GB.


Overall, Destiny 2 is shaping up to be a great-looking game that appears well optimized for the PC. Now we just have to wait for the retail version to arrive in a couple of months for a more detailed look at its multiplayer performance on enthusiast hardware.